
FourByThree proposes the development of a new generation of modular industrial robotic solutions that are suitable for efficient task execution in collaboration with humans in a safe way and are easy to use and program by the factory workers.

The project was active for three years, December 2014-2017.

For the second consecutive year, FourByThree will host a special session at IEEE’s conference on Emerging Technologies And Factory Automation (ETFA). This year, the conference will take place in Limassol (Cyprus), on September 12-15, 2017.

FourByThree’s session focuses on safe human-robot collaboration, including currently available technologies, planning and scheduling, verification and dependability, long-term autonomy, as well as the human perspective (trust towards and acceptance of robotics systems).

The session aims to gather contributions in human-robot collaboration that respond to the challenge of creating new technologies and robotic solutions based on innovative hardware and software, enforcing efficiency and safety. Such solutions should address real industrial needs, providing suitable applications in possible human-robot scenarios in a given workplace without physical fences, comprising both human-robot coexistence and collaboration.

There is an open call for papers for the session (deadline: April 9). Further information on the session is available at ETFA’s website.

In the factories of the future, achieving safe and flexible cooperation between robots and human operators will contribute to enhancing productivity.

Having robots perform tasks in collaboration with humans poses two main challenges: robots must be able to perform tasks in complex, unstructured environments and, at the same time, they must be able to interact naturally with the workers they are collaborating with, while guaranteeing safety at all times.

A requirement for natural human-robot collaboration is to endow the robot with the capability to capture, process and understand human requests accurately and robustly.

Using voice and gestures in combination is a natural way for humans to communicate with other humans. By analogy, the two channels can be considered equally relevant to achieving natural communication between workers and robots.

In such a multimodal communication scenario, the information coming from the different channels can be complementary or redundant, as shown in these examples:

In the first example, the need for different communication channels complementing each other is evident. However, redundancy can also be beneficial in e.g. industrial scenarios in which noise and variable lighting conditions may reduce the robustness of each channel when considered independently.

Our work in the context of the FourByThree project focuses on a semantic approach that supports multimodal interaction between human workers and industrial robots in real industrial settings, taking advantage of both input channels, voice and gestures. Some examples of this natural interaction could be:

This natural communication facilitates coordination between both actors, enhancing a safe collaboration between robots and workers.

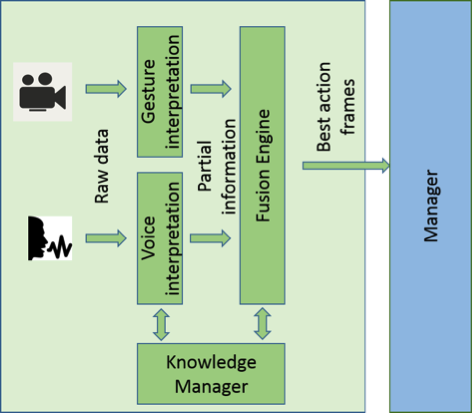

The implemented semantic multimodal interpreter is able to handle voice and gesture-based natural requests from a person and combine both inputs to generate an understandable and reliable command for the industrial robot, facilitating a safe collaboration. For this semantic interpretation, we have developed four main modules, as shown in Figure 1: a Knowledge Manager module that describes and manages the environment and the actions that the robot can perform, using semantic representation technologies; a Voice Interpreter module that, given a voice request, extracts the key elements in the text and translates them into a robot-understandable representation, combining Natural Language Processing and Semantic Web technologies; a Gesture Interpretation module that resolves pointing gestures and some simple commands like ‘stop’ or ‘resume’; and a Fusion Engine that combines both mechanisms into a complete and reliable robot-commanding mechanism.

Figure 1: Multimodal semantic interpreter approach architecture.

The knowledge manager uses an ontology to model the environment and the robot capabilities, as well as the relationships between the elements in the model, which can be understood as implicit rules that a reasoner can exploit to infer new information. Thus, the reasoner can be understood as a rule engine in which human knowledge can be represented as rules or relations. So, through ontologies, we model the industrial scenarios in which robots collaborate with humans. The model includes robot behaviours, actions they can accomplish and the objects they can manipulate/handle. It also considers features and descriptors of these objects.

For each individual action or object, a tag data property is included, listing the most common expression(s) used in natural language to refer to it, together with the language of each expression. These tags are automatically extended with semantically related terms from the Spanish WordNet [1] at initialization time. In this way, we obtain different candidate terms referring to a certain concept, which the voice interpreter uses to resolve voice requests.
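The tag extension step can be pictured with a minimal Python sketch. It is only an illustration under stated assumptions: the `SYNONYMS` table is a hand-written stand-in for a WordNet lookup (the project uses the Spanish WordNet; English terms are used here for readability), and the names `expand_tags` and `build_index` are hypothetical, not part of the FourByThree software.

```python
# Hypothetical stand-in for a WordNet synonym lookup (illustrative entries only).
SYNONYMS = {
    "remove": ["take off", "eliminate"],
    "screw": ["bolt"],
}


def expand_tags(tags, synonyms):
    """Return the original tags plus any related terms found for them."""
    expanded = set(tags)
    for tag in tags:
        expanded.update(synonyms.get(tag, []))
    return expanded


def build_index(concepts, synonyms):
    """Map every candidate term to the set of concepts it may refer to."""
    index = {}
    for concept, tags in concepts.items():
        for term in expand_tags(tags, synonyms):
            index.setdefault(term, set()).add(concept)
    return index


# Assumed toy knowledge base: concept name -> tags written by the modeller.
concepts = {"unscrew": ["remove", "unscrew"], "screw": ["screw"]}
index = build_index(concepts, SYNONYMS)
```

Building the index once at start-up means that, at run-time, a term heard in a request can be mapped to its candidate concepts with a single dictionary lookup.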

The relations defined within the ontology between the concepts are used by the interpreter for disambiguation at run-time. This ability is very useful for text interpretation because sometimes the same expression can be used to refer to different actions. For instance, people can use the expression ‘remove’ to request the robot to remove a burr, but also to remove a screw, depending on whether the desired action is deburring or unscrewing, respectively. If the relationships between the actions and the objects on which the actions are performed are known, the text interpretation is more accurate: it becomes possible to discern, in each case, which of the two options the expression ‘remove’ corresponds to. Without this kind of knowledge representation, this disambiguation problem is more difficult to solve.
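The ‘remove’ example can be sketched in a few lines of Python. This is a simplified illustration, not the project's reasoner: the `ACTIONS` dictionary is a hypothetical miniature knowledge base in which each action lists the expressions that may refer to it and the objects it can be applied to.

```python
# Hypothetical miniature knowledge base (names and structure are assumptions).
ACTIONS = {
    "deburr": {"tags": {"remove", "deburr"}, "objects": {"burr"}},
    "unscrew": {"tags": {"remove", "unscrew"}, "objects": {"screw"}},
}


def candidate_actions(expression):
    """Return every action that the given expression may refer to."""
    return sorted(name for name, a in ACTIONS.items() if expression in a["tags"])


def disambiguate(expression, obj):
    """Keep only the candidate actions that are related to the mentioned object."""
    return [
        name for name in candidate_actions(expression)
        if obj in ACTIONS[name]["objects"]
    ]
```

On its own, `candidate_actions("remove")` yields both actions; once the object is known, `disambiguate("remove", "burr")` leaves only deburring and `disambiguate("remove", "screw")` leaves only unscrewing.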

For our current implementation, two industrial contexts of the FourByThree project have been considered: a collaborative assembly task and a collaborative deburring task. The possible tasks the robot can fulfil in both scenarios have been identified and a knowledge base (KB) created, populating the knowledge manager ontology with instances representing those tasks. The knowledge base also includes the elements that take part in both processes, as well as the relationships they have with respect to the tasks. In this way, the semantic representation of the scenarios is available to support the request interpretation process, not only to infer which action is desired, but also to ensure that all the necessary information is available and coherent so that the robot can perform it.

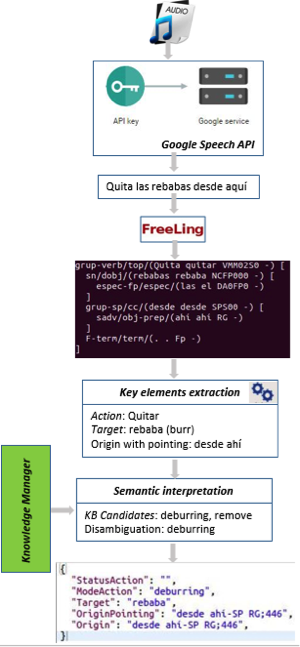

The voice interpreter takes the audio of a human request as input, obtains its content via the Google Speech API, processes it with Freeling [2], a Natural Language Processing tool, and extracts its key elements using a pattern recognition strategy. Those key elements are then looked up in the knowledge base of the corresponding scenario to obtain the tasks the robot can perform that best fit the input request, considering all the information available and exploiting the reasoning capabilities of the knowledge manager to infer the most suitable possibilities, as illustrated in Figure 2.

Figure 2: Voice interpreter illustrative execution example.

The gesture recognition approach is a valuable input to the fusion engine, in which both voice and gesture interpreter outputs are combined to compose the complete request according to the decision strategy summarized in Figure 3.

Figure 3: Semantic interpreter fusion strategy

At the end of the process, the interpreter is able to send the robot the proper command in accordance with the operator's request, ensuring that the request is coherent and contains all the information the robot needs to fulfil it.
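As a rough illustration of how two interpreter outputs might be combined, the Python sketch below shows one plausible fusion rule set. It is an assumption, not the decision strategy of Figure 3, which is not reproduced in the text: the types `VoiceResult` and `GestureResult`, the function `fuse` and the status values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoiceResult:
    action: Optional[str] = None   # action inferred from the spoken request
    target: Optional[str] = None   # object named in the request, if any


@dataclass
class GestureResult:
    command: Optional[str] = None  # simple gesture command, e.g. "stop"
    pointed: Optional[str] = None  # object resolved from a pointing gesture


def fuse(voice, gesture):
    """Combine both channels into one command, or report what is missing."""
    # Assumed rule: simple gesture commands are complete on their own.
    if gesture.command in {"stop", "resume"}:
        return {"status": "ok", "action": gesture.command, "target": None}
    if voice.action is None:
        return {"status": "incomplete", "missing": "action"}
    # Assumed rule: contradictory channels are rejected rather than guessed at.
    if voice.target and gesture.pointed and voice.target != gesture.pointed:
        return {"status": "conflict", "voice": voice.target, "gesture": gesture.pointed}
    # Complementary case: the pointing gesture fills in a missing target.
    target = voice.target or gesture.pointed
    if target is None:
        return {"status": "incomplete", "missing": "target"}
    return {"status": "ok", "action": voice.action, "target": target}
```

For example, a spoken "unscrew" with no object plus a pointing gesture resolved to a particular screw would produce a complete command, while a spoken request alone would be reported as incomplete so that the operator can be asked for the missing element.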

[1] A. González-Agirre, E. Laparra and G. Rigau, “Multilingual central repository version 3.0: upgrading a very large lexical knowledge base,” in GWC 2012 6th International Global Wordnet Conference, 2012.

[2] L. Padró and E. Stanilovsky, “Freeling 3.0: Towards wider multilinguality,” in LREC 2012, 2012.

On February 21, members of the FourByThree consortium gathered for a seminar on Exploitation and Commercialization held at IK4-TEKNIKER’s facilities in Eibar, Basque Country, Spain. The workshop was facilitated by European expert Ana Maria Magri.

The proposed tool for guiding the process was the Lean Canvas, an adaptation of the standard Business Model Canvas by Ash Maurya. Participants split into three groups to discuss the main approaches to the market that have already been identified within the consortium: the FourByThree kit or set of hardware and software components, integrated CoBot systems for specific applications, and a marketplace that incorporates contributions from third parties.

The seminar succeeded in its goal to bring up and discuss each strategy, exchanging insights and defining the upcoming steps. Ana Maria Magri stated that the debate on exploitation is already mature within the project, and the remaining nine months seem like a reasonable timeframe to further define and implement the commercial exploitation strategy.

Source: The Conversation

America’s manufacturing heyday is gone, and so are millions of jobs, lost to modernization. Despite what Treasury Secretary Steven Mnuchin might think, the National Bureau of Economic Research and Silicon Valley executives, among many others, know it’s already happening. And a new report from PwC estimates that 38 percent of American jobs are at “high risk” of being replaced by technology within the next 15 years.

But how soon automation will replace workers is not the real problem. The real threat to American jobs will come if China does it first.

Since the year 2000, the U.S. has lost five million manufacturing jobs. An estimated 2.4 million jobs went to low-wage workers in China and elsewhere between 1999 and 2011. The remainder fell victim to gains in efficiency of production and automation, making many traditional manufacturing jobs obsolete.

Though more than a million jobs have returned since the 2008 recession, the net loss has devastated the lives of millions of people and their families. Some blame robotics, others globalization. It turns out that those forces work together, and have been equally hurtful to manufacturing jobs. The car industry, for example, imports more and more parts from abroad, while automating their assembly in the U.S.

As a robotics researcher and educator, I strongly advocate that the best way to get those jobs back is to build on our existing strengths, remaining a leader in manufacturing efficiency and doing the hard work to further improve our educational and social systems to cope with a changing workforce. Particularly when looking at what’s happening in China, it’s clear we need to maintain America’s international competitiveness, as we have done since the beginning of industrialization.

In 2014, China exported more, and more valuable, products than the U.S. for the first time. Many of these were made by the low-wage laborers China has become famous for.

Yet China has also emerged as the largest growth market for robotics. Chinese companies bought more than twice as many industrial robots (68,000) in 2015 as American companies did (27,000). China’s Midea – an appliance manufacturer – just purchased the German robotic powerhouse Kuka.

China has understood that its competitive advantage of cheap labor will not last forever. Instead, labor costs will rise as its economy develops. Look at FoxConn, for example, the Taiwanese manufacturing contractor of the iPhone known for the high-pressure work environment at its plants in China. The company already uses more than 60,000 robots, and has said it wants to use as many as a million robots by 2020.

That’s a bold goal, especially given the current state of robotics. At present, robots are good only at highly repetitive tasks in structured environments. They are still far inferior to humans in simple tasks like picking items from a shelf. But FoxConn’s goal of transforming its streamlined manufacturing line is definitely achievable. Many of the tasks now done by humans thousands of times a day can be easily automated – such as applying a puddle of glue, placing double-sided tape, positioning a piece of plastic, tightening screws or loading products onto a pallet.

The lesson here is simple: Some occupations will simply disappear, like those of weavers in the textile industry displaced by the power loom. We need to embrace this disruption if we want to avoid being taken out of the game altogether. Imagine if China is able to replace our low-wage jobs with its workers, and then can automate those jobs: Work Americans now do will be done here, or anywhere – but not by humans. FoxConn is planning its first plant in the U.S.; soon, Chinese robots will be working in America.

The good news is that while many types of jobs will cease to exist, robots will create other jobs – and not only in the industry of designing new robots.

This is already beginning to happen. In 2014, there were more than 350,000 manufacturing companies with only one employee, up 17 percent from 2004. These companies combine globalization and automation, embracing outsourcing and technological tools to make craft foods, artisanal goods and even high-tech engineered products.

Many American entrepreneurs run small businesses using digital manufacturing equipment like 3-D printers, laser cutters and CNC mills, combined with online marketplaces such as mfg.com for outsourcing small manufacturing jobs. I’m one of them, manufacturing custom robotic grippers from my basement. Automation enables these sole proprietors to create and innovate in small batches, without large costs.

This sort of solo entrepreneurship is just getting going. Were robots more available and cheaper, people would make jewelry and leather goods at home, and even create custom-made items like clothing or sneakers, directly competing with mass-produced items from China. As with the iPhone, even seemingly complex manufacturing tasks can be automated significantly; it’s not even necessary to incorporate artificial intelligence into the process.

Three trends are emerging that, with industry buy-in and careful government support, could help revitalize the U.S. manufacturing sector.

First, robots are getting cheaper. Today’s US$100,000 industrial robotic arms are not what the future needs. Automating iPhone assembly lines will require cheap robotic arms, simple conveyor belts, 3-D-printed fixtures and software to manage the entire process. As we saw in the 3-D printing industry, the maker movement is setting the pace, creating low-cost fabrication robots. The government is involved, too: The Pentagon’s research arm, DARPA, has backed the OtherMill, a low-cost computer-controlled mill.

In addition, more people are programming robots. Getting a robot to accomplish repetitive tasks in industry – for example, using Universal Robots’ interface – is as simple as programming LEGO Mindstorms. Many people think it’s much harder than that, confusing robotic automation with artificial intelligence systems playing chess or Go. In fact, building and programming robots is very similar, both physically and intellectually, to doing your own plumbing, electrical wiring and car maintenance, which many Americans enjoy and are capable of learning. “Maker spaces” for learning and practicing these skills and using the necessary equipment are sprouting across the country. It is these spaces that might develop the skill sets that enable Americans to take automation into their own hands at their workplaces.

Lastly, cutting-edge research is improving the hardware needed to grasp and manipulate manufacturing components, and the software to sense and plan movements for assembling complex items. Industrial robot technology is upgradeable and new robots are designed to complement human workers, allowing industry to make gradual changes, rather than complete factory retooling.

To fully take advantage of these trends and other developments, we need to improve connections between researchers and businesses. Government effort, in the form of the Defense Department’s new Advanced Robotics Manufacturing Institute, is already working toward this goal. Funded by US$80 million in federal dollars, the institute has drawn an additional $173 million in cash, personnel, equipment and facilities from the academic and private sectors, aiming to create half a million manufacturing jobs in the next 10 years.

Those numbers might sound high, but China is way ahead: Just two provinces, Guangdong and Zhejiang, plan to spend a combined $270 billion over the next five years to equip factories with industrial robots.

The stakes are high: If the U.S. government ignores or avoids globalization and automation, it will stifle innovation. Americans can figure out how to strengthen society while integrating robotics into the workforce, or we can leave the job to China. Should it come to that, Chinese companies will be able to export their highly efficient manufacturing and logistics operations back to the U.S., putting America’s manufacturing workforce out of business forever.

Author: Nikolaus Correll

Source: Redshift (Madeline Gannon)

The way humans interact with robots has served society well during the past 50 years: People tell robots what to do, and robots do it to maximum effect. This has led to unprecedented innovation and productivity in agriculture, medicine, and manufacturing.

However, an inflection point is on the horizon. Rapid advancements in machine learning and artificial intelligence are making robotic systems smarter and more adaptable than ever—but these advancements also inherently weaken direct human control and relevance to autonomous machines. As such, robotic manufacturing, despite its benefits, is arriving at a great human cost: The World Economic Forum estimates that over the next four years, rapid growth of robotics in global manufacturing will put the livelihoods of 5 million people at risk, as those in manual-labor roles increasingly lose out to machines.

What should be clear by now is that robots are here to stay. So rather than continue down the path of engineering human obsolescence, now is the time to rethink how people and robots will coexist on this planet. The world doesn’t need better, faster, or smarter robots, but it does need more opportunities for people to pool their collective ingenuity, intelligence, and relentless optimism to invent ways for robots to amplify human capabilities.

For some designers, working with robots is already an everyday activity. The architectural community has embraced robots of all shapes and sizes over the past decade: from industrial robots and collaborative robots to wall-climbing robots and flying robots. While this research community continually astounds with its imaginative robotic-fabrication techniques, its scope tends to be limited: This community is primarily concerned with how robots build and assemble novel structures, not how these machines might impact society as they join the built environment.

In my own work, this underexplored territory has become somewhat of an obsession. I have striven to take my training as an architect (my hypersensitivity to how people move through space) and invent ways to embed this spatial understanding into machines. My latest spatially sentient robot—Mimus, created with support from Autodesk—has been living at the Design Museum in London since November 2016 as a part of the museum’s inaugural exhibition, Fear and Love: Reactions to a Complex World. Mimus is a 2,600-plus-pound industrial robot that I reprogrammed to have a curiosity for the world around her. Unlike traditional industrial robots, Mimus has no preplanned movements: She can freely roam around her enclosure, never taking the same path twice.

Ordinarily, robots like Mimus are completely segregated from people, performing highly repetitive tasks on a production line. However, my ambition with Mimus is to illustrate how to design clever software for industry-standard hardware and completely reconfigure human relationships to these complex machines in the process.

Mimus highlights the untapped potential for old industrial technology to work with people, not against them: It shows how small, strategic changes to an existing automation system can take a 1-ton beast of a machine from spot-welding car chassis in a factory to following a child around a museum like a puppy. While this interactive installation may still stoke some anxieties, my hope is that visitors leave Mimus expecting a future when more human-centered interfaces foster empathy and companionship between people and machines.

To be clear, I do not anticipate interactions with autonomous industrial robots to become a normal daily activity for most people. These machines are beginning to move out of factories and into more dynamic settings, but they will likely never stray too far from semicontrolled environments, like construction sites or film sets. However, experiments such as Mimus provide an opportunity to develop and test relevant interaction-design techniques for the autonomous robots that are already roaming skies, sidewalks, highways, and cities.

These newer, smarter robots—like drones, trucks, or cars—share many attributes with industrial robots: They are large, fast, and potentially dangerous nonhumanoid machines that don’t communicate well with humans. For example, in a city like Pittsburgh, where crossing paths with a driverless car is now an everyday occurrence, a pedestrian still has no way to read the vehicle’s intentions (which can be potentially disastrous). Mimus communicates her internal state of mind through body language: When someone approaches from far away, she looks at the person from above with intimidating, suspicious posturing. But as a visitor nears her enclosure, she approaches from below with excited, inquisitive posturing.

As intelligent, autonomous robots become increasingly prevalent in daily life, it is critical to design more effective ways to interact and communicate with them. In developing Mimus, I found a way to use the robot’s body language as a medium for cultivating empathy between museumgoers and a piece of industrial machinery. Body language is a primitive, yet fluid, means of communication that can broadcast an innate understanding of the behaviors, kinematics, and limitations of an unfamiliar machine. When something responds to people with lifelike movements––even when it is clearly an inanimate object––humans cannot help but project emotions onto it. However, this is only one designed alternative for how people might better cohabitate with autonomous robots. Society needs many more diverse and imaginative solutions for the various ways these intelligent machines will immerse themselves in homes, offices, and cities.

Deciding how these robots mediate human lives should not be in the sole discretion of tech companies or cloistered robotics labs. Designers, architects, and urban planners all carry a wealth of knowledge about how living things coexist in buildings and cities—insight that is palpably absent from the robotics community. The future of robotics has yet to be written, and whether a person identifies as tech-savvy or a Luddite, everyone has something valuable to contribute toward deciding how these machines will enter the built environment. I am confident that design and robotics communities can create a future in which technology will expand and amplify humanity, not replace it.